Screen predictors

Preview the tutorial

Watch this video to preview the steps in this tutorial. There might be slight differences in the user interface that is shown in the video. The video is intended to be a companion to the written tutorial. This video provides a visual method to learn the concepts and tasks in this documentation.


Try the tutorial

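In this tutorial, you will complete these tasks:

- Task 1: Open the sample project.

- Task 2: Examine the Data Asset and Type nodes.

- Task 3: Build the model.

- Task 4: Run the flow and view the results.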

Sample modeler flow and data set

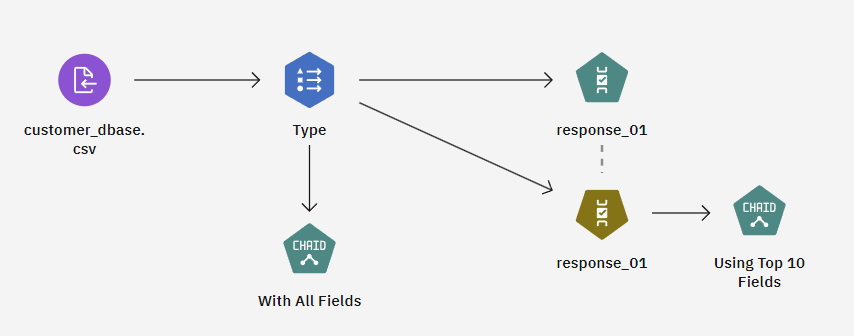

This tutorial uses the Screening Predictors flow in the sample project. The data file used is customer_dbase.csv. The following image shows the sample modeler flow. The flow builds a CHAID tree model in two ways:

- Without feature selection. All predictor fields in the data set are used as inputs to the CHAID tree.

- With feature selection. The Feature Selection node selects the 10 best predictors, which are then used as inputs to the CHAID tree.

By comparing the two resulting tree models, you can see how feature selection affects the size of the tree and the time it takes to build.

Task 1: Open the sample project

The sample project contains several data sets and sample modeler flows. If you don't already have the sample project, then refer to the Tutorials topic to create the sample project. Then follow these steps to open the sample project:

- In Cloud Pak for Data, from the Navigation menu, choose Projects > View all Projects.

- Click SPSS Modeler Project.

- Click the Assets tab to see the data sets and modeler flows.

Check your progress

The following image shows the project Assets tab. You are now ready to work with the sample modeler flow associated with this tutorial.

Task 2: Examine the Data Asset and Type nodes

Screening Predictors includes several nodes. Follow these steps to examine the Data Asset and Type nodes:

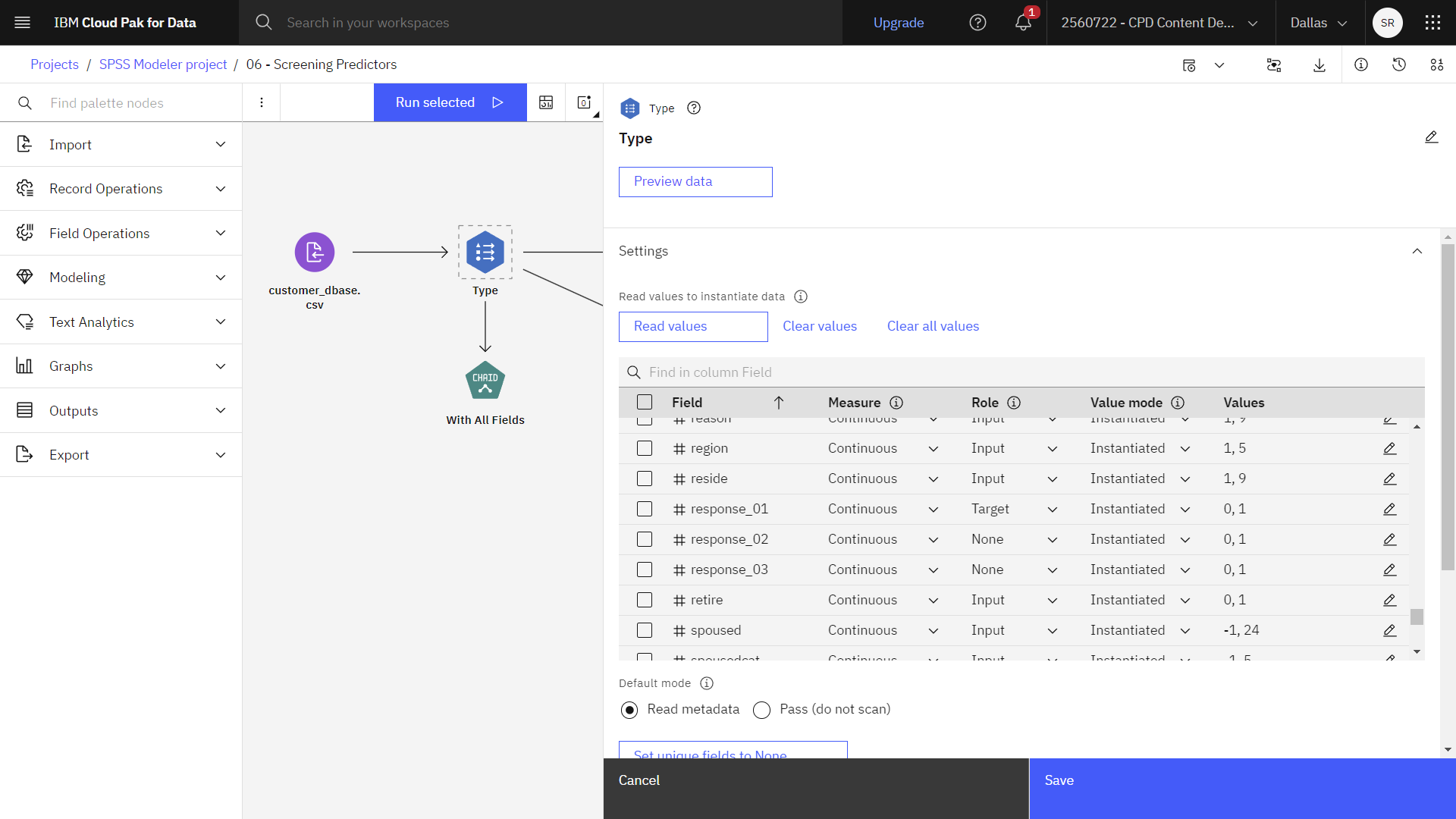

- From the Assets tab, open the Screening Predictors modeler flow, and wait for the canvas to load.

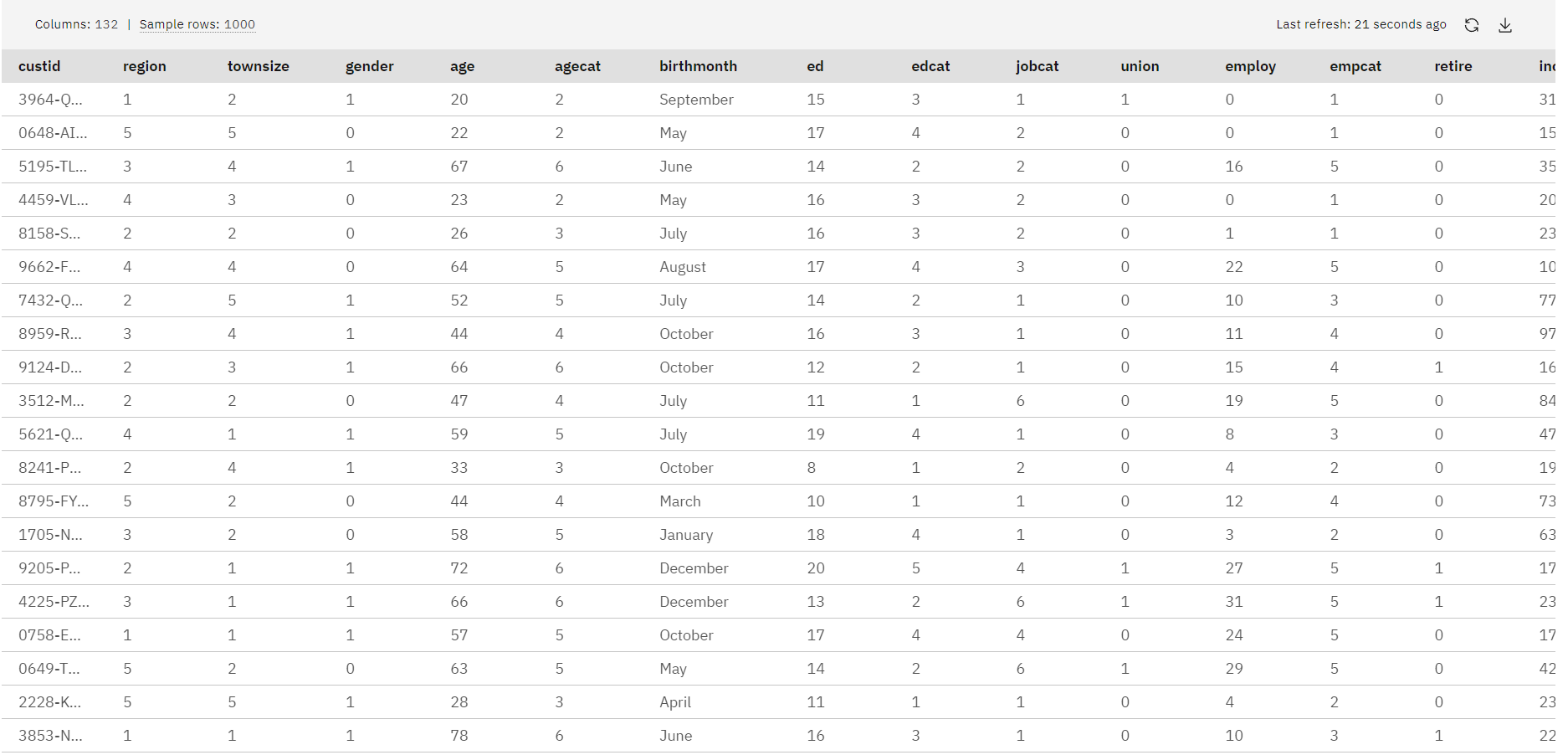

- Double-click the customer_dbase.csv node. This node is a Data Asset node that points to the customer_dbase.csv file in the project.

- Review the File format properties.

- Optional: Click Preview data to see the full data set.

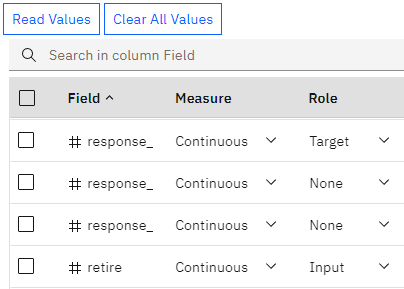

- Double-click the Type node. Notice the Role value for each of the following fields:

  - response_01 is set to Target

  - response_02, response_03, and custid are set to None

  - All other fields are set to Input

Figure 3. Type node measurement levels

- Click Read Values.

- Optional: Click Preview data to see the data set with the Type properties applied.

- Click Save.

Check your progress

The following image shows the Type node. You are now ready to build the model.

Task 3: Build the model

Follow these steps to build the model:

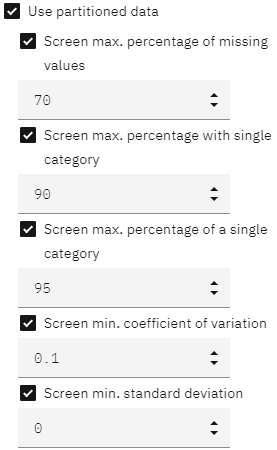

- Double-click the response_01 (Feature Selection) node to see its properties.

- Expand the Build Options section to see the defined rules and criteria that are used for screening or disqualifying fields.

Figure 4. Feature Selection Build Options

- Hover over the response_01 (Feature Selection) node, and click the Run icon.

- In the Outputs and models pane, click the model with the name response_01 to view the model. The results show the fields that are found to be useful in the prediction, ranked by importance. By examining these fields, you can decide which ones to use in subsequent modeling sessions.
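The exact screening rules and their defaults are set in the node's Build Options. As a rough illustration of what screening does, the following Python sketch applies two rules of this kind, a maximum percentage of missing values and a maximum percentage of records in a single category, to a few made-up records. The function name `screen_fields`, the thresholds, and the field names are hypothetical; this is a sketch under those assumptions, not how the Feature Selection node is implemented.

```python
from collections import Counter


def screen_fields(records, fields, max_missing_pct=70.0, max_single_category_pct=90.0):
    """Apply two illustrative screening rules; return (kept, dropped).

    records: list of dicts mapping field name -> value, where None means missing.
    The default thresholds are hypothetical percentages, not product defaults.
    """
    n = len(records)
    kept, dropped = [], []
    for field in fields:
        present = [r.get(field) for r in records if r.get(field) is not None]
        missing_pct = 100.0 * (n - len(present)) / n
        # Rule 1: disqualify fields with too many missing values.
        if not present or missing_pct > max_missing_pct:
            dropped.append((field, "too many missing values"))
            continue
        # Rule 2: disqualify fields where one value dominates the non-missing records.
        top_count = Counter(present).most_common(1)[0][1]
        if 100.0 * top_count / len(present) > max_single_category_pct:
            dropped.append((field, "too many records in a single category"))
            continue
        kept.append(field)
    return kept, dropped


# Made-up records; the field names are hypothetical.
records = [
    {"age": 34, "region": "east", "promo_code": None},
    {"age": 51, "region": "east", "promo_code": None},
    {"age": 28, "region": "east", "promo_code": "A"},
    {"age": 45, "region": "east", "promo_code": None},
]
kept, dropped = screen_fields(records, ["age", "region", "promo_code"])
print(kept)  # ['age']
print(dropped)
```

Here region is disqualified because every record has the same value, and promo_code is disqualified because 75% of its values are missing. Only fields that survive screening go on to be ranked.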

To compare results with and without feature selection, the flow uses two CHAID modeling nodes: one that uses feature selection and one that doesn't.

- Double-click the With All Fields (CHAID) node to see its properties.

- Under Objectives, verify that Build new model and Create a standard model are selected.

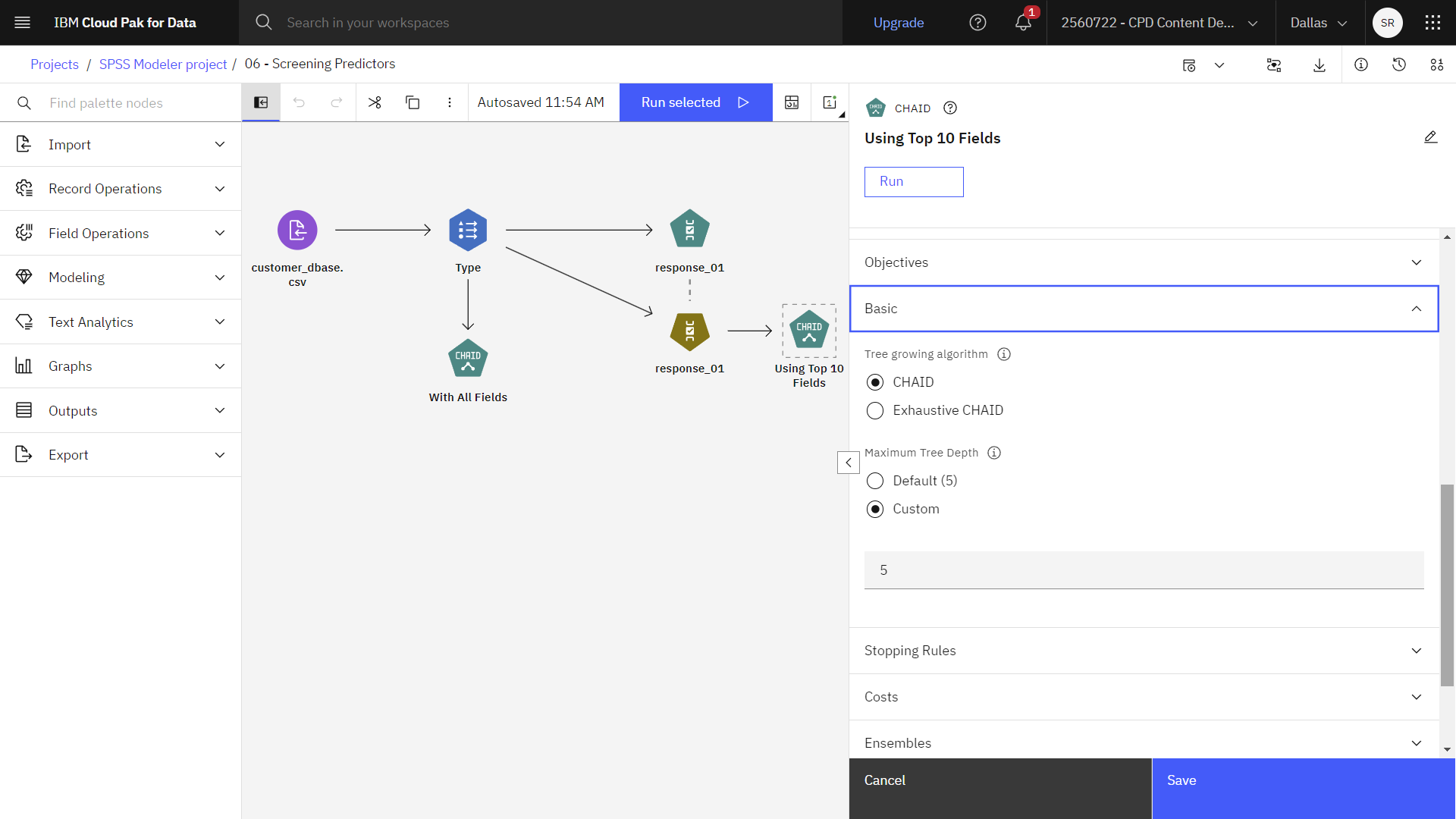

- Expand the Basic section, and verify that Maximum Tree Depth is set to Custom and the number of levels is set to 5.

- Click Save.

- Double-click the Using Top 10 Fields (CHAID) node to see its properties.

- Verify that its properties match those of the With All Fields (CHAID) node.

- Click Save.

Check your progress

The following image shows the Modeling node. You are now ready to run the flow and view the results.

Task 4: Run the flow and view the results

Follow these steps to run the flow and view the results of the two models with and without feature selection:

- Click Run all. As it runs, notice how long it takes each model to finish building.

- In the Outputs and models pane, click the model with the name With All fields to view the results.

- Click the Tree Diagram page.

- Zoom out to see the scope of the tree diagram.

- Close the model details window.

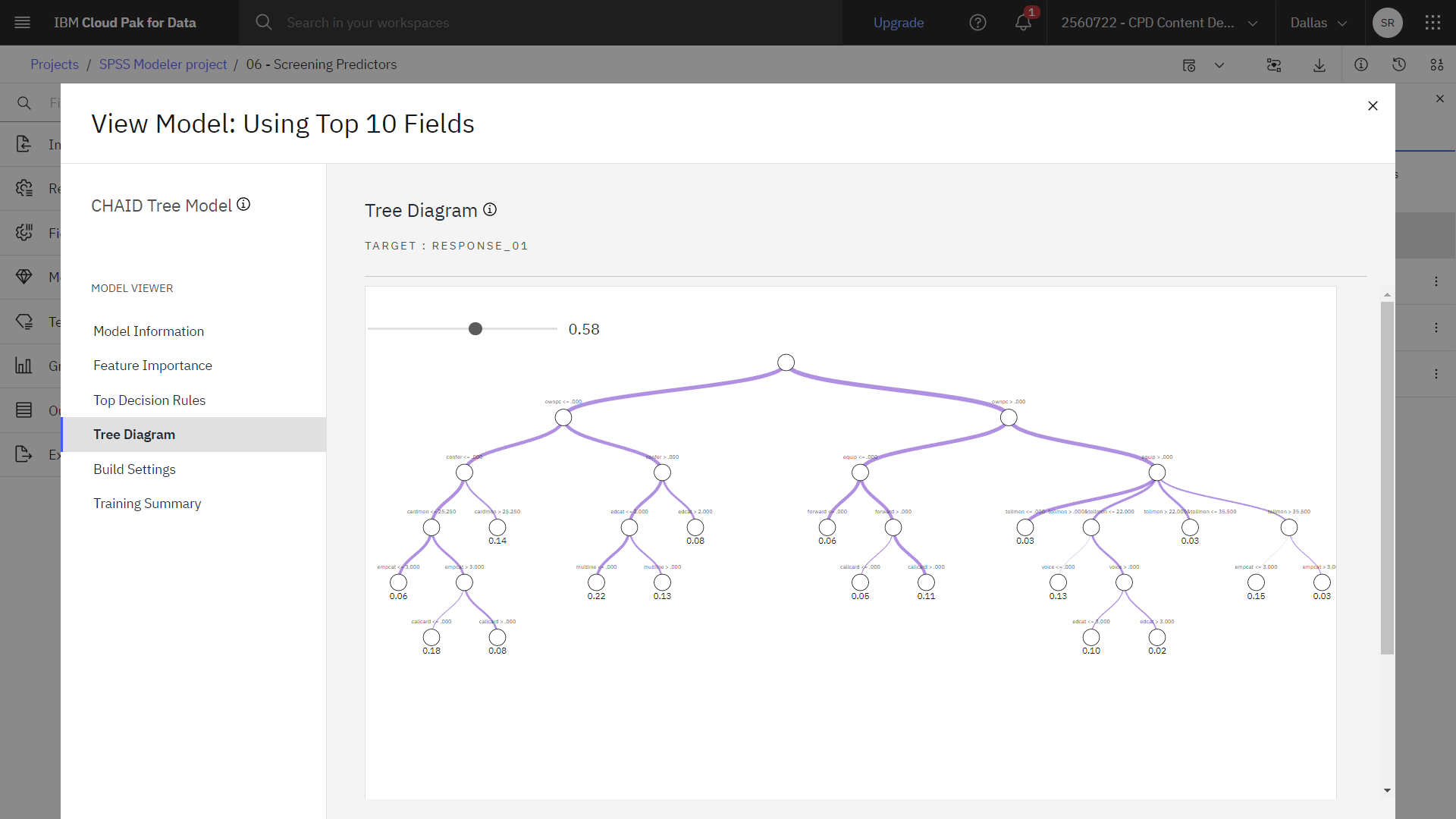

- In the Outputs and models pane, click the model with the name Using Top 10 fields to view the results.

- Click the Tree Diagram page.

- Zoom out to see the scope of the tree diagram.

It might be hard to tell, but the second model ran faster than the first one. Because this data set is relatively small, the difference in run times is probably only a few seconds, but for larger real-world data sets the difference might be noticeable: minutes or even hours. Using feature selection can speed up your processing times dramatically.

You might instead use a tree-building algorithm to do the feature selection work, allowing the tree to identify the most important predictors for you. In fact, the CHAID algorithm is often used for this purpose, and it's even possible to grow the tree level by level to control its depth and complexity. However, the Feature Selection node is faster and easier to use: it ranks all predictors in a single step, so you can identify the most important fields quickly.
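To make the single-step ranking concrete, the following Python sketch scores each categorical predictor against a categorical target (such as response_01) with a Pearson chi-square test of independence, then keeps the `top_k` predictors with the smallest p-values. This is a simplified stand-in chosen for illustration, not the Feature Selection node's documented computation; the function names and toy columns are hypothetical, and continuous predictors would need a different test. The chi-square tail probability is computed with the standard recurrence over integer degrees of freedom so the sketch needs only the standard library.

```python
import math
from collections import Counter


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    # Start from the closed forms for df = 2 or df = 1, then step up by 2 using
    # Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    if df % 2 == 0:
        q, k = math.exp(-x / 2), 2
    else:
        q, k = math.erfc(math.sqrt(x / 2)), 1
    while k < df:
        q += math.exp((k / 2) * math.log(x / 2) - x / 2 - math.lgamma(k / 2 + 1))
        k += 2
    return q


def pearson_chi2(xs, ys):
    """Pearson chi-square statistic and degrees of freedom for two categorical columns."""
    n = len(xs)
    x_counts, y_counts = Counter(xs), Counter(ys)
    observed = Counter(zip(xs, ys))
    stat = 0.0
    for a, na in x_counts.items():
        for b, nb in y_counts.items():
            expected = na * nb / n
            stat += (observed[(a, b)] - expected) ** 2 / expected
    df = (len(x_counts) - 1) * (len(y_counts) - 1)
    return stat, df


def rank_predictors(columns, target, top_k):
    """Rank predictors by chi-square p-value (smallest first) and keep the top_k names."""
    scored = []
    for name, values in columns.items():
        stat, df = pearson_chi2(values, target)
        p = chi2_sf(stat, df) if df > 0 else 1.0
        scored.append((p, name))
    scored.sort()
    return [name for _, name in scored[:top_k]]


# Hypothetical toy data: has_card mirrors the target, region is unrelated to it.
target = ["yes", "yes", "no", "no", "yes", "no", "yes", "no"]
columns = {
    "has_card": ["y", "y", "n", "n", "y", "n", "y", "n"],
    "pet_owner": ["y", "n", "y", "n", "y", "n", "y", "n"],
    "region": ["e", "w", "e", "w", "w", "e", "e", "w"],
}
top = rank_predictors(columns, target, top_k=2)
print(top)  # ['has_card', 'pet_owner']
```

Each predictor is scored with one pass over the records, independently of the other predictors, which is what lets this kind of ranking run as a single step rather than growing a tree level by level.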

Check your progress

The following image shows the tree diagram from the model.

Summary

The second tree contains fewer tree nodes than the first, so it's easier to comprehend. Using fewer predictors is also less expensive: you have less data to collect, process, and feed into your models, and computing time improves. In this example, even with the extra feature selection step, model building was faster with the smaller set of predictors. With a larger real-world data set, the time savings might be much greater.

Using fewer predictors results in simpler scoring. For example, you might identify only four profiles of customers who are likely to respond to the promotion. With larger numbers of predictors, you run the risk of overfitting your model. The simpler model might generalize better to other data sets, although you need to test it to be sure.

Next steps

You are now ready to try other SPSS® Modeler tutorials.