Sending model transactions

If you are evaluating machine learning models or generative AI assets, you must send model transactions from your deployment to enable model evaluations.

To keep your model evaluation results accurate over time, you must continue to send new data from your deployment.

Importing data

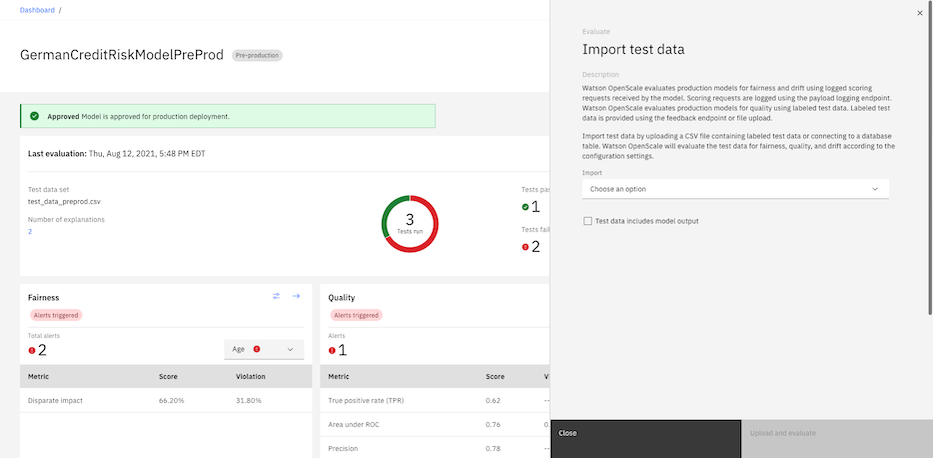

When you review evaluation results on the Insights dashboard, you can use the Actions menu to import payload and feedback data for your model evaluations.

For pre-production models, you can import data by uploading CSV files or connecting to data that is stored in Cloud Object Storage or a Db2 database.

If you want to upload data that is already scored, select the Test data includes model output checkbox. When you select this option, the test data is not rescored. The data that you import can also include record_id/transaction_id and record_timestamp columns, which are added to the payload logging and feedback tables when this option is selected.
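As an illustration of the optional columns described above, the following sketch adds record_id and record_timestamp columns to already-scored rows and serializes them as CSV text. The helper names (`add_record_columns`, `to_csv`), the sample feature columns, and the ISO 8601 timestamp format are assumptions made for this example; check the data format documentation for the exact values that your evaluation expects.

```python
import csv
import io
import uuid
from datetime import datetime, timezone


def add_record_columns(rows, now=None):
    """Return copies of rows with record_id and record_timestamp filled in.

    Values that a row already carries are kept unchanged.
    The timestamp format used here is an assumption, not a documented requirement.
    """
    stamp = (now or datetime.now(timezone.utc)).strftime("%Y-%m-%dT%H:%M:%S.%fZ")
    enriched_rows = []
    for row in rows:
        enriched = dict(row)
        enriched.setdefault("record_id", str(uuid.uuid4()))
        enriched.setdefault("record_timestamp", stamp)
        enriched_rows.append(enriched)
    return enriched_rows


def to_csv(rows):
    """Serialize a non-empty list of dicts that share the same keys to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical scored test data: two feature columns plus the model output.
scored_rows = [
    {"age": 42, "income": 55000, "prediction": "approved"},
    {"age": 23, "income": 18000, "prediction": "denied"},
]
csv_text = to_csv(add_record_columns(scored_rows))
```

You could write `csv_text` to a file and upload that file from the Actions menu with the Test data includes model output checkbox selected.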

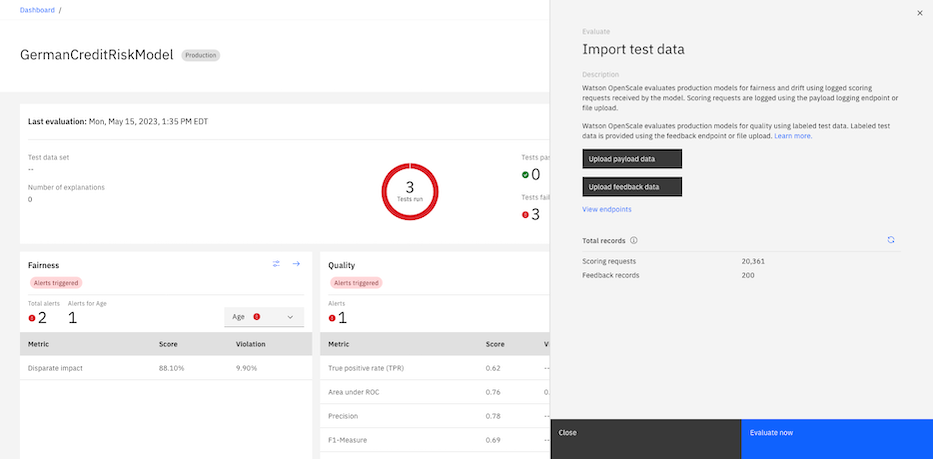

For production models, you can import data by uploading CSV files or using endpoints to send your model transactions.

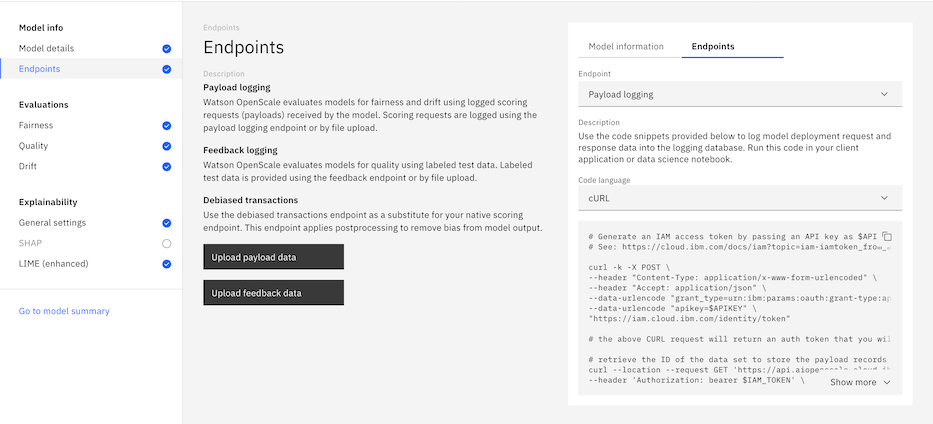

Using endpoints

For production models, you can use endpoints to provide data in formats that enable evaluations. Use the payload logging endpoint to send scoring requests for fairness and drift evaluations, and use the feedback logging endpoint to provide feedback data for quality evaluations. You can also upload CSV files to provide data for model evaluations. For more information about the data formats, see Managing data for model evaluations.

You can also use a debiased transactions endpoint to review the results of fairness evaluations. The debiased transactions endpoint applies active debiasing to your payload data to detect any bias in your model. For more information about active debiasing, see Reviewing debiased transactions.

You can use the following steps to send model transactions for your model evaluations with endpoints:

- On the monitor configuration page, select the Endpoints tab.

- If you want to upload payload data with a CSV file, click Upload payload data.

- If you want to upload feedback data with a CSV file, click Upload feedback data.

- In the Model information panel, click Endpoints.

- From the Endpoint menu, select the type of endpoint that you want to use.

- From the Code language menu, choose the type of code snippet that you want to use.

- Click Copy to clipboard to copy the code snippet, and then run the code in your notebook or application.

Logging payload data with Python

When you select the payload data endpoint from the Endpoints menu in Watson OpenScale, the following code snippet shows how to log your payload data:

    from ibm_cloud_sdk_core.authenticators import IAMAuthenticator
    from ibm_watson_openscale import APIClient
    from ibm_watson_openscale.data_sets import DataSetTypes, TargetTypes
    from ibm_watson_openscale.supporting_classes.payload_record import PayloadRecord

    # Authenticate with IBM Cloud IAM and create the Watson OpenScale client
    service_credentials = {
        "apikey": "$API_KEY",
        "url": "{$WOS_URL}"
    }

    authenticator = IAMAuthenticator(
        apikey=service_credentials["apikey"],
        url="https://iam.cloud.ibm.com/identity/token"
    )

    SERVICE_INSTANCE_ID = "{$WOS_SERVICE_INSTANCE_ID}"
    wos_client = APIClient(
        authenticator=authenticator,
        service_instance_id=SERVICE_INSTANCE_ID,
        service_url=service_credentials["url"]
    )

    # Put your subscription ID here
    SUBSCRIPTION_ID = "{$SUBSCRIPTION_ID}"

    # Look up the payload logging data set for the subscription
    payload_logging_data_set_id = wos_client.data_sets.list(
        type=DataSetTypes.PAYLOAD_LOGGING,
        target_target_id=SUBSCRIPTION_ID,
        target_target_type=TargetTypes.SUBSCRIPTION
    ).result.data_sets[0].metadata.id

    # Put your data here
    REQUEST_DATA = {
        "parameters": {
            "template_variables": {
                "{$TEMPLATE_VARIABLE_1}": "$TEMPLATE_VARIABLE_1_VALUE",
                "{$TEMPLATE_VARIABLE_2}": "$TEMPLATE_VARIABLE_2_VALUE"
            }
        },
        "project_id": "$PROJECT_ID"
    }

    RESPONSE_DATA = {
        "results": [
            {
                "generated_text": "$GENERATED_TEXT"
            }
        ]
    }

    # Replace $RESPONSE_TIME with the numeric response time of the scoring call
    RESPONSE_TIME = $RESPONSE_TIME

    # Store one payload record that pairs the request with its response
    wos_client.data_sets.store_records(
        data_set_id=payload_logging_data_set_id,
        request_body=[
            PayloadRecord(
                request=REQUEST_DATA,
                response=RESPONSE_DATA,
                response_time=RESPONSE_TIME
            )
        ]
    )

The "project_id": "$PROJECT_ID" value specifies that you want to log payload data for evaluations in projects. To log payload data for evaluations in spaces, you can specify the "space_id": "$SPACE_ID" value instead. You can use the Manage tab in projects and spaces to identify the project or space ID for your model.
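The choice between project and space scope comes down to which ID key the request carries. The following is a minimal sketch, assuming a hypothetical helper named `build_request` (it is not part of the Watson OpenScale SDK), that builds a request dictionary in the same shape as REQUEST_DATA and refuses ambiguous input. The template variable name and ID values are made up for illustration.

```python
def build_request(template_variables, project_id=None, space_id=None):
    """Build the request part of a payload record for one prompt call.

    Exactly one of project_id or space_id must be provided, because the
    record is logged for an evaluation in either a project or a space.
    """
    if (project_id is None) == (space_id is None):
        raise ValueError("Provide exactly one of project_id or space_id")

    request = {"parameters": {"template_variables": dict(template_variables)}}
    if project_id is not None:
        request["project_id"] = project_id
    else:
        request["space_id"] = space_id
    return request


# Hypothetical values for illustration only.
project_request = build_request({"question": "What is drift?"}, project_id="my-project-id")
space_request = build_request({"question": "What is drift?"}, space_id="my-space-id")
```

Either dictionary could then be passed as the `request` argument of `PayloadRecord` in the snippet above.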

Logging feedback data with Python

When you select the feedback data endpoint from the Endpoints menu in Watson OpenScale, the following code snippet shows how to log your feedback data:

    from ibm_cloud_sdk_core.authenticators import IAMAuthenticator
    from ibm_watson_openscale import APIClient
    from ibm_watson_openscale.supporting_classes.enums import DataSetTypes, TargetTypes

    # Authenticate with IBM Cloud IAM and create the Watson OpenScale client
    service_credentials = {
        "apikey": "$API_KEY",
        "url": "{$WOS_URL}"
    }

    authenticator = IAMAuthenticator(
        apikey=service_credentials["apikey"],
        url="https://iam.cloud.ibm.com/identity/token"
    )

    SERVICE_INSTANCE_ID = "{$WOS_SERVICE_INSTANCE_ID}"
    wos_client = APIClient(
        authenticator=authenticator,
        service_instance_id=SERVICE_INSTANCE_ID,
        service_url=service_credentials["url"]
    )

    # Put your subscription ID here
    subscription_id = "{$SUBSCRIPTION_ID}"

    # Look up the feedback data set for the subscription
    feedback_dataset_id = wos_client.data_sets.list(
        type=DataSetTypes.FEEDBACK,
        target_target_id=subscription_id,
        target_target_type=TargetTypes.SUBSCRIPTION
    ).result.data_sets[0].metadata.id

    # Column names: template variables, the label column, and the model output
    fields = [
        "{$TEMPLATE_VARIABLE_1}",
        "{$TEMPLATE_VARIABLE_2}",
        "{$LABEL_COLUMN}",
        "_original_prediction"
    ]

    # One inner list per feedback record, in the same order as fields
    values = [
        [
            "$TEMPLATE_VARIABLE_1_VALUE",
            "$TEMPLATE_VARIABLE_2_VALUE",
            "$LABEL_COLUMN_VALUE",
            "$GENERATED_TEXT_VALUE"
        ]
    ]

    # Store the feedback records and wait for the request to complete
    wos_client.data_sets.store_records(
        data_set_id=feedback_dataset_id,
        request_body=[{"fields": fields, "values": values}],
        background_mode=False
    )
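Feedback data often starts out as one dictionary per record instead of parallel lists. The following sketch, which assumes a hypothetical helper named `to_fields_and_values` (it is not part of the Watson OpenScale SDK) and made-up column names, converts such records into the fields and values layout that the snippet above passes in request_body. It keeps every row in the same column order as fields.

```python
def to_fields_and_values(records, fields):
    """Convert a list of dicts into the {"fields": [...], "values": [[...]]} layout.

    Each inner list of values follows the order of `fields`. A record that
    lacks one of the fields raises KeyError, so incomplete rows are not
    silently logged.
    """
    values = [[record[name] for name in fields] for record in records]
    return {"fields": list(fields), "values": values}


# Hypothetical feedback records for a prompt template with one variable.
feedback_records = [
    {
        "question": "What is drift?",
        "reference_answer": "A change in data over time.",
        "_original_prediction": "Drift is a change in the input data.",
    },
    {
        "question": "What is bias?",
        "reference_answer": "Systematic unfairness.",
        "_original_prediction": "Bias is unfair treatment of a group.",
    },
]
column_order = ["question", "reference_answer", "_original_prediction"]
feedback_body = [to_fields_and_values(feedback_records, column_order)]
```

In this sketch, `feedback_body` has the same shape as the request_body argument of `store_records` in the snippet above.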
